$1B AI Notetaker Was Just 2 Guys Listening To Your Calls...

The GC’s Guide to Surviving the Great AI Pretend-ocalypse


You’ve seen the headlines:

Startup reaches $1B valuation with breakthrough AI automation.

But the story you don’t see: “Breakthrough AI” = two exhausted founders eating Costco pizza, manually performing the AI tasks in a San Francisco living room they can’t afford.

This week’s example? Fireflies.ai’s co-founder admitted they charged $100/month for an “AI notetaker”…that was actually him and his co-founder dialing into meetings silently and typing like court reporters on Monster Energy.

And honestly? Respect.

It worked.

But for GCs?

This is your cautionary tale of the year.

Because if you’re trusting a vendor’s “AI platform,” there is a non-zero chance that behind the model there is a human named Fred who has never passed a background check and is currently taking notes on your confidential board meeting from a futon.

Let’s talk about how to survive this era of AI hype without becoming the star of the next “lol look at this vendor” thread on Twitter.

Lesson 1: The Demo Is a Lie (or at least a work of fiction)

Let me tell you something from years of seeing how the sausage is made: If the founder keeps saying “Our AI does…” but cannot, in fact, show you the “AI” doing anything in a live workflow, you are about to buy a dream and a prayer.

Fireflies admitted it: They validated the business by being the AI.

I’ve seen this movie too:

The “AI contract analyzer” that was actually three offshore paralegals

The “AI red-team tool” that just ran nmap and sent you a PDF

The “AI onboarding chatbot” that forwarded all questions to an intern named Chloe

The “AI policy generator” that was 100% ChatGPT plus some light jazz in the UI

AI vendors think they’re selling vision.

GCs think they’re buying capability.

Reality: everybody is lying to each other politely until renewal.

Lesson 2: If they can’t explain the model, you’re not buying a model

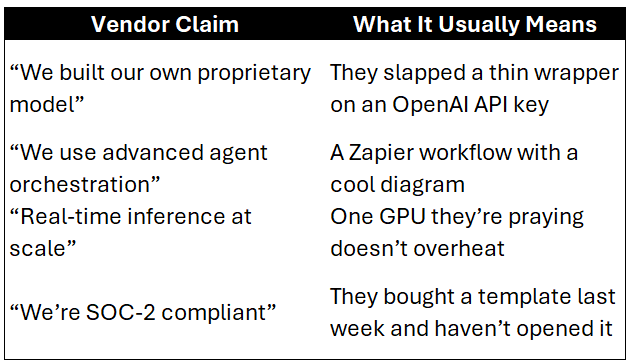

Here’s a vendor red-flag checklist for early-stage AI:

And my favorite: “We can’t disclose the architecture for IP reasons.”

Translation: “We don’t know what the architecture is because the engineer who built it left.”

Lesson 3: The real risk isn’t fake AI, it’s undisclosed humans

Fireflies sent humans into private meetings. Humans listened to strategy, customer info, internal issues, maybe even financials.

Would a regulator call that unauthorized access?

Would a customer call that a breach?

Would your CEO call that “WHY DIDN’T LEGAL CATCH THIS!?”

Yes, yes, and aggressively yes.

Here’s the part people forget: Human-in-the-loop = human-with-your-data.

There is a MASSIVE legal difference between: (a) your confidential information touching an LLM and (b) your confidential information touching Kevin sitting cross-legged on an IKEA rug.

If your AI vendor does not explicitly state whether humans are involved, assume the answer is: “More humans than you think and fewer guardrails than you hope.”

Lesson 4: Vendor diligence is now a full-contact sport

Stop asking AI vendors, “How does your AI work?”

That gets you TED Talk answers. Instead ask:

Who or what touches customer data? (Name every human.) If they hesitate? Red flag.

Do contractors ever see customer data? If the answer is “only sometimes,” the answer is “yes.”

Do you store prompts? For how long? Where? If they say “temporarily,” ask: define temporarily.

How many SOC 2 controls require human action? Look for the smirk that says “most of them.”

Could your system function if every human left tomorrow? If they laugh, your AI is fake.
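If your team tracks vendor answers in a spreadsheet or intake tool, the questions above can be turned into a rough screening pass. What follows is a minimal sketch, not a real diligence product: the `DiligenceAnswer` record, the `red_flags` function, and the `VAGUE_PHRASES` list are all hypothetical names invented for illustration, and the only "rules" are the ones already named above (hesitation, “only sometimes,” “temporarily”).

```python
from dataclasses import dataclass


@dataclass
class DiligenceAnswer:
    """One vendor response to one diligence question (hypothetical record)."""
    question: str
    answer: str
    hesitated: bool = False  # did the vendor stall or dodge on the call?


# Assumption: these are just the two vague answers called out above.
# Extend the tuple with whatever evasions your own vendors favor.
VAGUE_PHRASES = ("only sometimes", "temporarily")


def red_flags(answers):
    """Return a list of human-readable red flags found in the answers."""
    flags = []
    for a in answers:
        text = a.answer.strip().lower()
        if a.hesitated:
            flags.append(f"{a.question}: vendor hesitated")
        if not text:
            flags.append(f"{a.question}: no answer given")
        for phrase in VAGUE_PHRASES:
            if phrase in text:
                flags.append(f'{a.question}: vague answer ("{phrase}")')
    return flags


if __name__ == "__main__":
    answers = [
        DiligenceAnswer("Do contractors ever see customer data?", "Only sometimes."),
        DiligenceAnswer("Who or what touches customer data?",
                        "Two named engineers under NDA", hesitated=True),
        DiligenceAnswer("Do you store prompts?", "No, never stored."),
    ]
    for flag in red_flags(answers):
        print(flag)
```

A script like this does not replace the follow-up conversation. It just makes sure a “temporarily” never slides through intake without someone asking what it means.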

Lesson 5: The contract is your only actual protection

Here are the clauses that matter:

1. Human involvement disclosure

Make them state, clearly, who touches your data.

2. Data location & residency requirements

You don’t want your board notes in a Dropbox folder labeled “AI training stuff – DO NOT DELETE.”

3. Security posture requirements

Minimum controls. Written. Enforceable.

4. Right to audit AI workflows

If they refuse, they’re hiding Kevin.

5. Post-termination data deletion (with certification)

Not “we think it’s deleted.”

“Here is proof.”

6. No model training on your data

Unless your CEO enjoys surprise features announced on a blog post.

Lesson 6: Startups Validate Manually But Scale Requires Reality

I’m not dunking on Fireflies. Their founder admitted what many founders won’t: they validated manually, survived, and built something real.

That honesty deserves applause. But here’s the nuance GCs care about:

Manual validation is fine.

Manual production is NOT. Especially when:

customer data is involved

meeting content is confidential

sales calls contain financial projections

employees share HR issues

executives discuss strategy or layoffs

This is where GCs get very twitchy. Because if the “AI notetaker” is typing from someone’s living room, congratulations: You now have unvetted individuals inside your most sensitive conversations.

Lesson 7: Don’t be the GC who approved the AI vendor with “two humans and a dream”

Your CEO wants AI tools. Your product team wants AI tools. Your board wants AI tools.

But when the next Fireflies-style disclosure drops (and trust me, it will), the only question will be:

“Did Legal ask the right questions?”

So ask them. And then ask them again.

And then ask where the founders are currently sitting while the “AI” runs.

Final Thought: AI hype won’t kill companies, undisclosed humans will

The real danger isn’t fake AI. It’s fake AI plus real data.

AI will transform everything. But during this period of “magical AI demos,” “agent orchestration,” and “trust us, it scales,” remember this:

Behind every early-stage AI product is either a GPU cluster…or a guy named Fred eating pizza.

Your job is to know which.

Is it weird if, in some ways, I’d rather have a human Kevin see my data than an LLM?